Executive Summary

This post is the second in a series that uses the history and economics of the American semiconductor industry to ask big-picture questions about the future of fiscal policy and industrial policy. As the pandemic ends, the US will have a historic opportunity to revamp its public and economic infrastructure. However, for industrial policy to be effective, many older strategies need to be updated so that they are consistent with the suite of macroeconomic policy settings that support tight labor markets. Today’s post argues that the history of semiconductor manufacturing offers clear lessons for using industrial policy not just in resolving the present shortage, but in building a robust innovative ecosystem to secure the technological frontier for the long term.

While this is a history of the semiconductor industry, the policy takeaways it highlights hold for a wide range of industries. First, fiscal mechanisms play a crucial role in providing liquidity and mitigating financial uncertainty for highly uncertain sectors operating at the economy’s technological frontier. At the same time, industrial policy which inculcates robust supply chains through the reduplication of investment and employment plays a central role in gaining and holding the technological frontier. Science policy — the coordination of R&D undertaken by universities, private companies and public-private partnerships — is not enough. Finally, policy ambition is critical. Though bipartisanship is important, the scale of industrial policy must be such that it is able to achieve its goals.

Semiconductors and Industrial Policy

In our previous piece, we explained how failure to manage aggregate demand led to a stagnation in investment, employment, and output, using the semiconductor industry as a prime example. We also showed how any plan to prevent future shortages would require support for demand in addition to sector-specific supply incentives, if it is to be effective over the long term. Today, we take a wider view, and show how the government guided the early years of semiconductor production using a mixture of supply incentives, demand supports and regulatory coordination to create a robust and innovation-focused competitive ecosystem.

Industrial policy played a key role in the development of the semiconductor industry. Early industrial policy provided roles for a variety of participants: small firms experimented at the technological frontier, while large firms pursued process improvements to ensure those innovations scaled up. Government demand ensured that experimentation was financially feasible, while technology transfer regulations ensured that advancements were shared between large and small firms. Critically, regular purchasing provided the liquidity necessary for firms to continue iterating without relying on large-scale one-off products. This approach to industrial policy encouraged innovation by ensuring that small firms had access to domestic production at scale for innovative designs, while allowing larger firms to reap the benefits of producing these innovative designs at scale.

As the industry matured and the competitive environment changed, the policy framework shifted as well. Since the 1970s, industrial policy has been incrementally replaced by a capital-light “science policy” strategy, while mammoth “champion firms” and asset-light innovators have replaced a robust ecosystem of small and large production-focused firms. While this strategy was initially successful, it has created a fragile system. Today, the industry is constrained on one side by fragile supply chains narrowly tailored to the needs of a few firms with enormous investment moats, and on the other side by the many asset-light design firms who are unable to generate or capture process improvements.

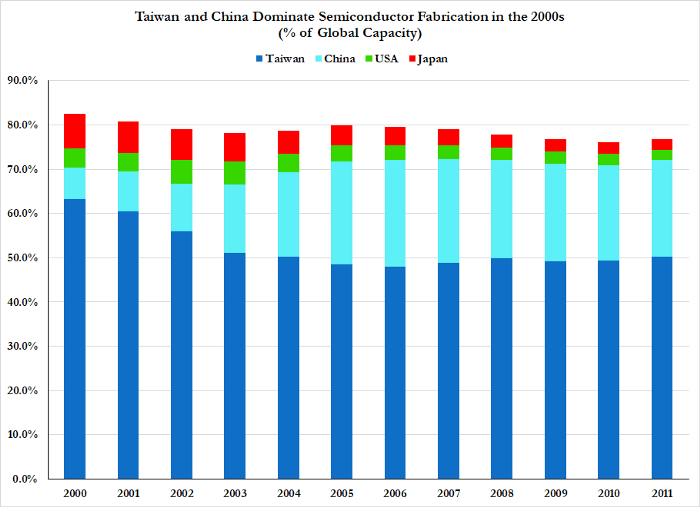

While the US semiconductor industry regained dominance in the 1990s, today, as a consequence of that policy approach, the US industry’s technological and commercial advantages are more fragile than before. With TSMC’s rise over Intel, the US has already lost the technological frontier and US firms face critical supply bottlenecks. Fissures in the supply chain exposed by the pandemic show that semiconductor production is a crucial economic and national security concern, given semiconductors’ status as a general purpose technology with a role to play in almost every major supply chain. While science policy clearly has a role to play, it can take a narrow view of the process of technological advance, favoring the development of new ideas over the diffusion of new techniques into the capital stock. Process innovation is a hands-on practice, and requires the consistent buildout and implementation of new production lines. Learning-by-doing cannot be easily simulated in a low-capex asset-light production environment.

Technological innovation occurs across every part of the supply chain, and benefits from a diverse array of players and a dynamic labor market. Labor is not just a cost center at the technological frontier, but rather, a critical input to the innovative process. Policymakers should be cognizant of the lessons from semiconductor industrial policy when addressing the present shortage, and work to create the kind of robust competitive ecosystem needed to spur innovation. We will illustrate how the history of semiconductor policy shows the path for policymakers to pursue strategies that regain the US’s technological edge while creating a more secure and resilient supply chain.

Early Days and Industrial Policy

At the industry’s inception, the US government helped foment a diverse ecology of semiconductor firms using both industrial policy and science policy, to ensure that any scientifically viable approach was also economically viable. Fiscal spending provided the necessary liquidity to get this highly speculative industry off the ground. This strategy required consistent intervention to maintain an innovative and vibrant competitive ecosystem.

The Department of Defense (DoD) used purchasing agreements and quasi-regulatory measures to ensure an ecosystem of firms and wide dispersion of technological advances. Government contracts created a ready market for early firms, and the DoD was eager to play the role of first customer. With assurance that there would be demand for large-scale production of semiconductors, capacity investment became financially viable for many small, early firms.

As a central customer for many firms, the DoD had a clear view on the most recent technological developments in the industry and used this view to directly facilitate conversation and knowledge sharing between firms and researchers. At the same time, “second source” contracts, which required that any chips purchased by the DoD would be produced by a minimum of two firms, linked procurement to technology transfer. The DoD even required Bell Labs and other large-scale R&D departments to publish technical details and widely license their technology, to ensure that the building blocks of innovation were available to all firms the DoD would potentially contract with.

This system led to an accelerated pace of innovation that quickly spread throughout the entire sector. Government purchasing agreements ensured that investors were willing to spend, and that increased spending on reduplicated capital goods helped create significant process improvements. At the same time, workers moved freely throughout the system, applying knowledge gained at one firm to improve the production process of others.

This competitive environment — in combination with the era’s approach to anti-trust — encouraged the development of large research labs at large firms, and wild experimentation at smaller firms. Successful experiments helped create new large firms, or were scaled by already-existing large firms. Industrial guidance from the DoD helped push the technology in new directions while keeping industry capacity coherent and targeted. Crucially, this strategy implicitly privileged development of new techniques by the sector as a whole over maximizing revenue or minimizing costs for any individual firm. If firms needed to invest in and hold capital goods, financing was available. The government protected the sector from so-called “market discipline” so that the focus could remain on innovation and production, rather than narrowly-construed economic success.

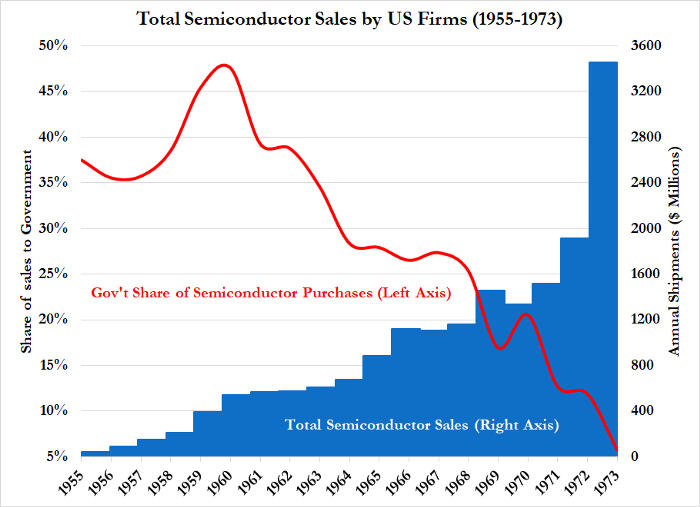

However, by the end of the 1960s, the industry had grown so much and so fast that government procurement — and thus the government’s ability to exercise quasi-regulations through things like second source contracts — had become relatively unimportant. While the existence of the semiconductor industry was predicated on military purchases in the late 1940s, military purchases represented less than a quarter of the market by the late 1960s.

The 1970s: Booming Commercial Markets

Between the boom in commercial applications and the absence of serious international competition, the 1970s represented a golden age for US domestic semiconductor firms, despite the relative unimportance of government procurement and guidance.

While industrial policy had catalyzed early innovations and capacity buildout, its relative absence in the 1970s was hardly noticed. To be sure, government purchasing still played some role in the 1970s, but private sector firms became more important purchasers, as they began to seriously integrate electronics into their supply chains. The beginning of mass-produced computers also had a symbiotic relationship with semiconductor development, as the needs of chips drove advances in packaging and integration.

In fact, the DoD’s priorities began to meaningfully diverge from the priorities of commercial clients. The DoD sought niche solutions to specifically military problems, especially the development of non-silicon-based or radiation-hardened semiconductors, that had minimal commercial application. The government and semiconductor firms alike recognized that the industry no longer needed direct guidance.

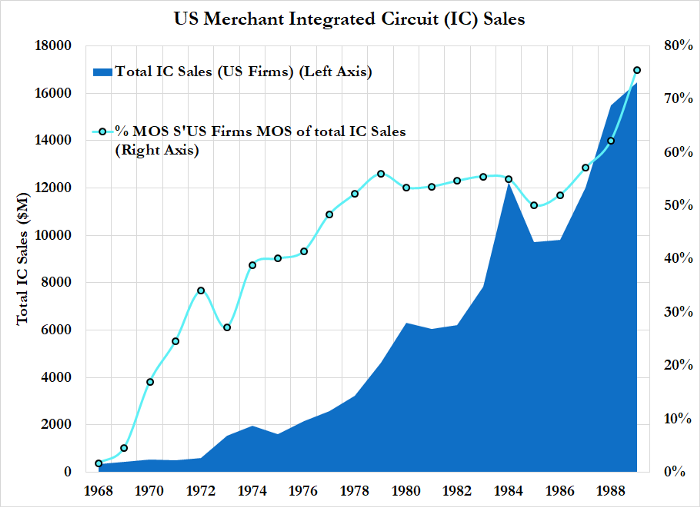

In the 1970s, the booming non-defense market meant successful small and large firms coexisted without much government support or coordination. Technology improvements turned into process improvements which in turn drove further technology improvements. New inventions — MOS ICs, the microprocessor, DRAM — propelled the industry to new heights, and recursively suggested further innovation paths.

In an environment of general prosperity and innovation, semiconductors came into their own as a general purpose technology with wide application throughout the economy. While large research labs and domestic fabrication represented substantial asset holdings, the absence of international competition and the booming market ensured that most investments ultimately worked out, whether in innovation or profit terms.

The 1980s: Fierce International Competition

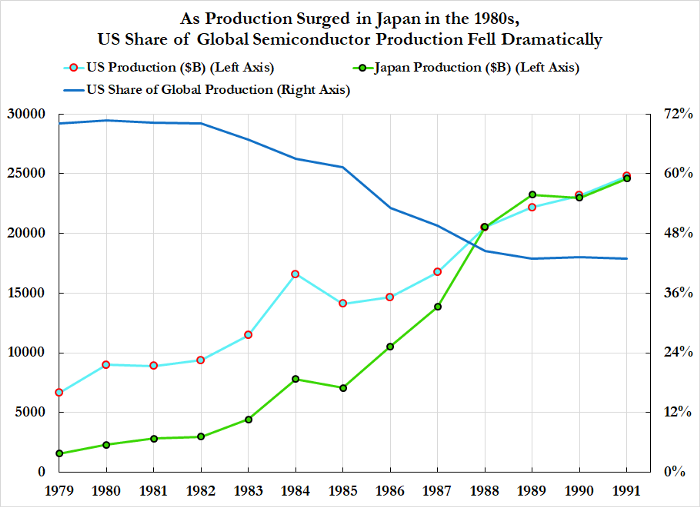

However, the optimism and largesse inculcated by this competitive environment was cut short in the 1980s, when the US lost market and technological dominance to Japanese firms guided by industrial policy from the Ministry of International Trade and Industry.

Japan had used the same types of policies that the US had used to rapidly build out capacity and dominate global markets: centralized guidance, purchasing agreements, cheap financing. However, Japan pursued a slightly different strategy, focusing on honing the production of better-understood technologies for export markets, rather than military implementation alone. Once DRAM became a standard, and one of the largest single markets within semiconductors as a sector, Japan quickly dominated.

While the US government had to create the initial market for semiconductors, Japan was able to structure its industrial policy around a fast-growing and already-existing market. As such, Japan was able to pursue much more heavy-handed policy than the US had — building out infrastructure and coordinating joint ventures in computing and semiconductors alike — knowing that there was a ready commercial market for its products. While the strategy of government support and coordination of investment was the same as that used by the US in the 50s and 60s, the tactics used to implement that strategy were tailored to the competitive environment of the 1980s.

The arrival of Japanese competition had a dramatic impact on US firms. Many exited the DRAM market permanently in the ensuing shakeout. The industry also responded by forming advocacy groups to coordinate production and lobby for tariffs and trade policy interventions. The Semiconductor Industry Association lobbied for protection from perceived Japanese “dumping,” while the Semiconductor Research Corporation (SRC) was formed to organize and fund academic research into semiconductor development that was relevant to commercial markets, but not the Department of Defense. SEMATECH was funded jointly by industry members and the DoD and originally intended to foment horizontal collaboration between firms, in the manner of earlier industrial policy. However, it quickly pivoted to a focus on vertical integration between suppliers and manufacturers, with a view to minimizing costs.

Lagging-edge semiconductors had already become commodities, interchangeable and judged on the basis of unit cost. The legacy vertically integrated firms began to disintegrate in the 1980s owing to a combination of technical and economic drivers. Given the economic situation in the US at the time, there was little appetite to invest in capacity in low-value-add activities in a much more competitive global market.

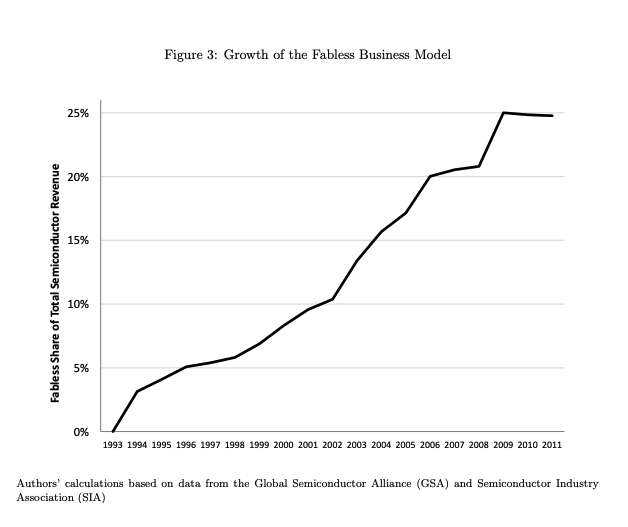

Instead, large firms rolled up whatever productive capacity small firms still had, and created large conglomerates. The emergence of MOS transistors as the industry’s dominant design made dedicated manufacturing ‘foundries’ economical, as firms began adopting similar design principles. The ensuing vertical disintegration led to the emergence of large, vertically integrated conglomerates co-existing with small design-focused ‘fabless’ firms, who produced designs but not chips. In theory, this preserved flexibility for these ‘fabless’ firms to pursue innovative design strategies while minimizing overhead costs. The US industry’s embrace of this strategy led to a revival of market share in the 1990s as US firms pioneered new product classes and Japanese firms faced competition from Korean entrants.

From a policy side, the US never returned to domestic industrial policy. Rather, the success of foreign industrial policy programmes was met with domestic consolidation, monopolization, trade protectionism, and funding for scientific research.

The 1990s: Science Policy, Not Industrial Policy

As the industry confronted the technological and competitive changes of the 1980s, the 1990s saw the culmination of the US’ new ‘science policy’ approach. Rather than return to industrial policy — whether the kind the US had used in the past, or an approach more influenced by MITI — the 1990s saw the introduction of “science policy” as the new paradigm for government action in semiconductor manufacturing. Science policy focused on fostering public-private partnerships with individual firms, the closer integration of industry R&D with academic R&D, a broad division of research labor, and an industry structure that allowed innovative firms to run asset-light.

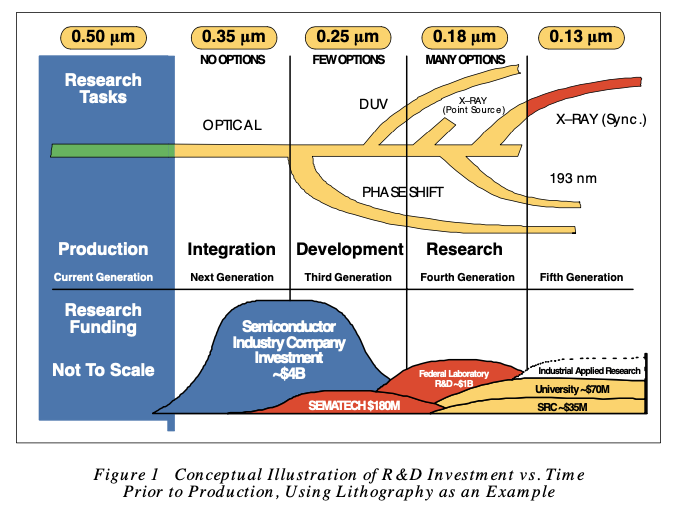

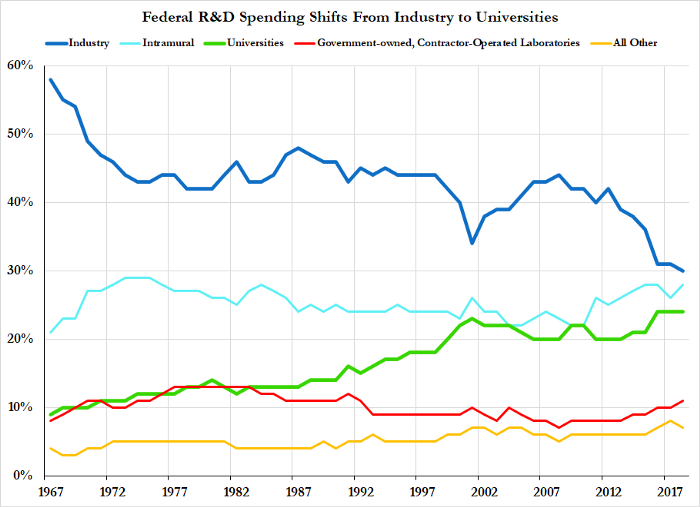

The goal of policy shifted from creating a robust competitive ecosystem with strong supply chains, to creating public-private institutions to coordinate complex handoffs between researchers, fabless design firms, equipment suppliers and large-scale “champion firms.” This way, no firms would need to spend more than absolutely necessary on R&D, preserving global cost competitiveness, while the government would also avoid large-scale investment spending. The chart below, from the 1994 National Technology Roadmap for Semiconductors produced by the Semiconductor Industry Association, gives a flavor of the strategy behind science policy:

The central theme of the “science policy” arrangement was efficiency in the narrow sense of non-redundancy. Early industrial policy had focused on redundancy and duplication to bring innovations to every part of the supply chain as quickly as possible. Small and large firms alike had managed their own production, and second-source contracts ensured that viable processes spread quickly through the ecosystem of firms. While the earlier industrial policy strategy had greatly accelerated the pace of innovation, and ensured that whole supply chains would be robust to the failure of individual companies, it did mean a lot of duplication in investment. Despite the fact that this approach helped drive the adoption of process improvements, static shareholder value maximization dictated that this duplication was too economically wasteful.

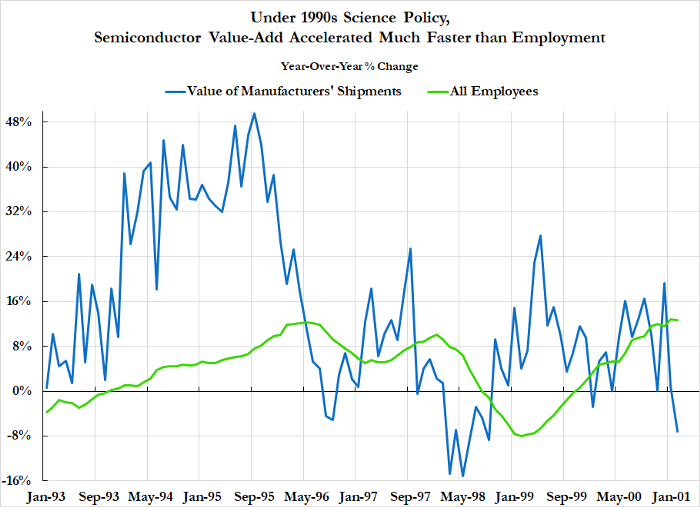

Whereas industrial policy of prior decades fostered large-scale employment, a core driver of innovation, 1990s “science policy” avoided this approach for the sake of minimalistic efficiency. In the earlier era, workers changed firms frequently, and learning-by-doing represented a central pathway to innovation. In fact, the “untraded interdependencies” literature within Economic Geography evolved in part to explain how important the intermingling of large groups of semiconductor industry workers was to the industry’s fast-paced innovation. While maintaining a large volume of workers in a single location was key to many advancements, in this new competitive environment, it came to be seen as wasteful. Labor was a substantial portion of unit cost, and firms believed that if they could strategically downsize, global competitiveness would return.

In the early days of semiconductors, with relatively price-insensitive government contracts comprising a substantial portion of total sales, this inefficiency was seen as the cost of innovation. As foreign competitors came online, and a cost-conscious commercial market became the main buyer of semiconductors, this duplication of capabilities seemed like a pure cost center with few benefits to many firms. Profitability concerns meant ensuring that as little work as possible was duplicated, in order to keep the cost side in check against a highly price-sensitive competitive environment. This created a collective action problem, where it was in every individual firm’s interest to cut spending, but doing so further worsened US firms’ ability to innovate.

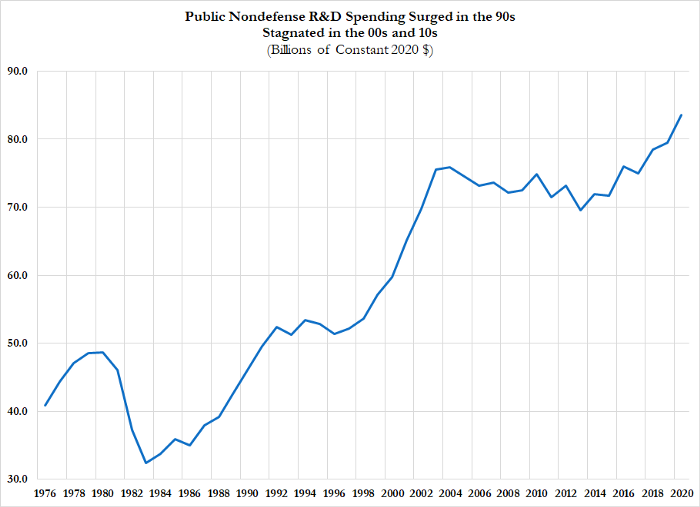

In the 1990s, rather than return to industrial policy, the US government opted for the much cheaper program of science policy. Ideally, “science policy” would allow the government to coordinate firms’ contradictory desire to economize without falling further behind technologically. However, in keeping with the spirit of the time, the US government was also trying to economize, and would not provide the large-scale fiscal support needed for industrial policy to be successful in the new competitive environment.

Instead, the government would spend a much smaller amount of money, and attempt to inaugurate a division of labor that would allow all participants to cut costs in pursuit of profitability without sacrificing the technological frontier. To do this, it funded R&D in academic research laboratories on one side and industry groups to translate that research into commercial capabilities on the other. In a way, this further devalued the R&D investments of individual firms, as advancements created only minimal competitive advantages. Rather than an ecosystem of firms with overlapping supply chains, this structure created a division of labor where each firm or institution owned a separate part of an apparently-divisible innovation process. At the same time, more permissive trade policy and better shipping capabilities made it even more economical for leading-edge firms to go “fabless,” the most asset-light strategy possible. The goal was to recapture the technological frontier on the cheap for the public and private sector alike by solving a collective action problem and reducing redundancies in the system as a whole.

In the near term, this strategy worked! The US successfully regained technological superiority by the late 1990s amidst a general domestic boom in investment in semiconductors and technology in general. The sector was able to innovate while remaining internationally competitive without the large-scale fiscal support of domestic industrial policy. Most individual firms concentrated R&D heavily on the next node or two in development of the production process, while longer-range research was organized by government grant-funded academic researchers. Industry groups stepped in to translate this academic research to commercial actors, and the cost of duplicated labor in R&D as well as production was largely eliminated. Large centralized research laboratories hollowed out, and supply chains became much more narrowly targeted to the research demands of a few core firms.

The 2000s: Dot Com Crash and Diminishing Returns

However, the near-term success of this strategy came at a steep long-term cost. Redundancies in labor and capital helped ensure that firms were able to quickly internalize process improvements while also training the next generation of engineers and technologists. While this duplication may have been “redundant” from the perspective of static maximization of shareholder returns in a single period, it was critical to ensuring long-run innovative trajectories. “Eliminating redundancies” and “increasing fragility” are two sides of the same coin.

Eating your seed corn and underinvesting to juice profitability and competitiveness only works once. In the long term, that underinvestment in labor and capital shows up somewhere, whether on balance sheets, innovative capacity, or both. As it stands, the US is in danger of losing its advantage in leading-edge design, and has already lost much of its supremacy in leading-edge fabrication to TSMC. Assigning one part of the investment process to each company may make every individual company’s balance sheet appear more robust, but the industry as a whole has become much more fragile through persistent underinvestment. Decades of minimized labor costs have shrunk the pool of skilled technologists and engineers, while decades of underinvestment in capacity have hampered domestic firms’ ability to respond to the present shortage.

The industry’s present problems are natural long-run results of the science policy strategy that appeared so successful in the late 1990s and early 2000s. The drive to consolidation and vertical integration that focused long-range research in academic labs, mammoth “champion firms” and asset-light “fabless” innovators has created a rickety competitive ecosystem.

Since these champion firms make up such a large proportion of the competitive landscape, their R&D priorities and intermediate input needs set the terms for the industry as a whole. Major buyers like Intel are able to implicitly or explicitly use their relative monopsony power to structure supply chains narrowly around their needs. When the needs of the broader economy shift — as they have since the onset of the pandemic — these fragile supply chains are easily caught offsides. This fragility is a clear result of a supply chain optimized for short-term profitability and elimination of redundancy, not one geared to the needs of the economy as a whole.

Champion firms also, whether intentionally or not, shape the path of technological development around their own financial needs and plans. As such, the policy mix of R&D at academic labs combined with tax optimization and unit cost minimization at private firms has created substantial technological path dependency. At the same time, these champion firms are “too big to fail” in a technological sense: if they miss a process improvement, the absence of same-size domestic competitors means that the industry as a whole misses out on that advancement. In a meaningful sense, technology policy as a whole is delegated to private actors.

The 2010s: ‘Fabless’ Firms, R&D, and Offshoring

Strange inconsistencies and feedback loops have also begun to appear in the path from R&D to production. Key to the science policy strategy was the analytical and economic separation of innovations in intellectual property from innovations in the production process. In layman’s terms, policy privileged research, design and ideas over implementation, production, and investment. The rise of “fabless firms” that leveraged the process improvements of foreign fabrication plants was a direct consequence of this strategy.

However, prioritizing R&D can paradoxically reduce the pace of innovation. Subsidizing R&D alone is no different from incentivizing offshoring: policy rewards the development of intellectual property, not ownership of physical assets. The problem is, process improvements come from the implementation of new technologies embodied in new physical assets. “Learning by doing” is a critical part of technical innovation. A good engineer looks to innovate at every step in the production process at every point in the supply chain. Offshoring and outsourcing production of cutting-edge designs introduces a black box around process that can leave things like uneconomical yields impossible to correct for. Focusing on R&D alone offshores the development of these process improvements and starves domestic producers while preventing the labor force from developing new skills.

This constraint is particularly visible in the fact that academic research has drifted from the path of commercialization and created lock-ins along certain paradigms of innovation. Given that academic research is often structured around questions far from present production, it is not surprising that it is sometimes unable to provide insights into alternative applications for existing technologies, or alternative process-driven innovation paths. Since science policy leaves this group in charge of long-term innovation strategies for the industry as a whole, this blind spot cannot be ignored. In fact, the failure of Moore’s Law and the shift to unique designs for heterogeneous chips in many applications shows well how innovations often imply multiple paths of technological development at any point.

A decades-long failure to invest in industrial capacity and employment has created a situation where US firms are highly reliant on outside fabrication plants. Current plans to invest in a domestic TSMC-owned fabrication plant represent an attempt to simply buy our way out of the problem and do not reduce our reliance on a single-sourced supplier for leading edge designs. Instead, we should look to the history of industrial policy in the early era of semiconductor production to regain the technological frontier, and push innovation forward at every point in the supply chain.

Today: Policy Implications and Strategy

Now that the US faces a shortage of lagging-edge semiconductors and diminished innovative capacity, policymakers are considering serious interventions. While it is probably too late to address the present shortage, the time to prevent the next shortage is now. Together, the broad bipartisan support for infrastructure spending, the imperative of building back better after the pandemic, and national security anxieties about semiconductor sourcing should persuade policymakers that the time is right for ambitious reforms. As should be clear from the above, the history of industrial policy in semiconductors offers many lessons about how best to create high employment, technological innovation, and robust domestic supply chains.

History shows that science policy is a necessary complement to industrial policy, but insufficient on its own. Coordinating R&D is a necessary part of any solution, but hardly the entire solution. To capture process improvements and ensure that the labor force is sufficiently skilled to operate at the technological frontier, the industry needs to see consistent capacity expansion. However, as we have shown before, private firms are markedly hesitant to make uncertain investments in a low-demand environment. Industrial policy, through a combination of government purchasing and financing guarantees, direct funding, and other approaches, is the only way to provide sufficient liquidity to the industry to ensure that capacity expands quickly enough to keep the sector on the technological frontier. At the same time, the government has the fiscal ability to keep domestic firms producing lagging-edge commodity semiconductors viable for national security and supply chain resilience reasons. Outsourcing industrial policy to shareholder maximization has not worked out in the long term.

It is also crucial to realize that these policies can only succeed alongside strong economy-wide demand and tight labor markets, both in general and in semiconductor production in particular. A strong government-led investment buildout will create good jobs across a variety of experience and skill levels. This will create both a highly skilled workforce and ample opportunities for the kind of learning-by-doing that drives meaningful process improvements. In high-skill, high-capital-intensity industries, labor behaves almost like another form of capital good, paying clear dividends on investment. However, in the absence of sufficient employment opportunities, those specialized skills disappear as workers move on to other industries. This is not to say that labor upskilling is sufficient: legislation that creates training programs without also creating the necessary jobs and investment alongside will prove quickly self-defeating.

Some may balk at the scale of commitment needed to fund industrial policy in semiconductors and other key sectors. It is a vast market with enormous price tags: modern fabrication plants cost billions of dollars. However, semiconductors are a critical general purpose technology that enters into almost every supply chain. Large-scale industrial policy can keep bottlenecks from dragging on economic growth while simultaneously creating a robust domestic supply chain for our national security needs. Relative to the initial investments in semiconductor technology, a return to industrial policy would be much more expensive, but with even larger returns. As part of a four trillion dollar infrastructure or bipartisan supply chain bill, revitalizing lagging and leading-edge industries and returning to a robust competitive ecosystem is too good an investment to pass up.

Our policy goals are simple: the development of an expanded industrial policy toolkit to inculcate innovation, tight domestic labor markets, and maintenance of critical supply chain infrastructure. Semiconductors as an industry are an ideal starting point for figuring out these policy tools, owing to the scale of investment and jobs needed. Rebuilding a robust innovative environment will also help durably return the US to the technological frontier and create employment and investment that will pay dividends for years to come. Semiconductors play a critical role in a modern industrial economy, and their technological path is too important to be guided by short-term profitability concerns. The government has the opportunity and the responsibility to use industrial policy to stop the next shortage before it happens, while ensuring that the US maintains its place at the technological frontier.